In this article on Deep Learning, we will discuss the Restricted Boltzmann Machine in Python with its applications.

Deep Belief Network

A Deep Belief Network (DBN) is a powerful generative model built by stacking several Restricted Boltzmann Machines (RBMs) in a deep architecture. Each RBM transforms its input vectors nonlinearly (much as a layer of a standard neural network does), and its outputs are used as the inputs of the next RBM in the stack. This layered design makes DBNs flexible and easy to extend with additional layers. Because they are generative models, DBNs can be applied to both unsupervised and supervised problems, and they are used for feature learning, feature extraction, and classification. For example, the RBMs that make up a DBN are first pre-trained layer by layer in an unsupervised way; the network is then fine-tuned on a smaller labeled dataset and used for classification and other tasks.
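The layer-by-layer pre-training described above can be sketched with scikit-learn's `BernoulliRBM`. This is a minimal sketch, assuming scikit-learn is installed and using its bundled digits dataset; the two layer sizes and the hyperparameters are illustrative assumptions, and the supervised fine-tuning of the whole stack is not shown (`BernoulliRBM` does not provide backpropagation-based fine-tuning itself).

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neural_network import BernoulliRBM

# Load the 8x8 digit images and scale pixel values to [0, 1],
# because BernoulliRBM expects inputs in that range.
X, y = load_digits(return_X_y=True)
X = X / 16.0

# Greedy layer-wise pre-training: each RBM is trained without labels
# on the hidden-unit probabilities produced by the RBM below it.
layer_sizes = [128, 64]  # hidden units per RBM (illustrative assumption)
rbms = []
layer_input = X
for n_hidden in layer_sizes:
    rbm = BernoulliRBM(n_components=n_hidden, learning_rate=0.05,
                       n_iter=10, random_state=0)
    layer_input = rbm.fit_transform(layer_input)  # P(h = 1 | v) for each sample
    rbms.append(rbm)

print(layer_input.shape)  # (1797, 64): top-layer features for every image
```

Each RBM only sees the output of the layer below it, which is what makes this pre-training greedy; a full DBN would then fine-tune all layers together on labeled data.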

Boltzmann Machine

To give some background, Boltzmann machines are named after the Boltzmann distribution (also called the Gibbs distribution), a concept from statistical mechanics that gives the probability of a system being in a particular state as a function of that state's energy and the system's temperature. For this reason, Boltzmann machines belong to the family of Energy-Based Models (EBMs). They were created by Geoffrey Hinton and Terry Sejnowski in 1985.
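As a concrete illustration, the Boltzmann distribution gives a state with energy $E_i$ a probability proportional to $\exp(-E_i/T)$, where $T$ is the temperature (the Boltzmann constant is folded into $T$ here). A small NumPy sketch, with `boltzmann_probabilities` as a hypothetical helper name:

```python
import numpy as np

def boltzmann_probabilities(energies, temperature=1.0):
    """Return p_i = exp(-E_i / T) / sum_j exp(-E_j / T)."""
    energies = np.asarray(energies, dtype=float)
    logits = -energies / temperature
    logits -= logits.max()  # subtract the max for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()

energies = [0.0, 1.0, 2.0]
p_cold = boltzmann_probabilities(energies, temperature=1.0)
p_hot = boltzmann_probabilities(energies, temperature=10.0)
print(p_cold)  # roughly [0.67, 0.24, 0.09]
print(p_hot)   # roughly [0.37, 0.33, 0.30]
```

Lower-energy states are always more probable, and raising the temperature flattens the distribution toward uniform.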

A Boltzmann machine has no output nodes, which may seem strange. Its units are stochastic: each unit switches on or off with some probability instead of producing the conventional, deterministic 1 or 0 output that a method such as Stochastic Gradient Descent compares against a target to learn and optimize patterns. What makes Boltzmann machines unusual is that they learn patterns without such target outputs. Another thing to keep in mind is that, unlike typical neural networks, a Boltzmann machine has connections between its input nodes. The graphic shows that every node, whether it is an input node or a hidden node, is connected to every other node. This lets the nodes exchange information and allows the machine to generate new data on its own. Only the visible nodes are observed; the states of the hidden nodes are not measured. Given input data, the machine learns parameters that capture the patterns and correlations in that data. For this reason, Boltzmann machines are known as deep generative models and belong to the unsupervised deep learning category.
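The fully connected structure and stochastic units described above can be sketched in NumPy. This is a minimal sketch, assuming binary units, small random symmetric weights, and sequential Gibbs sampling; the sizes and the helper names `energy` and `gibbs_step` are hypothetical choices for illustration, and no training is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

n_visible, n_hidden = 3, 2
n_units = n_visible + n_hidden

# Fully connected: every unit (visible or hidden) has a symmetric weight
# to every other unit; there are no self-connections, so the diagonal is zero.
W = rng.normal(scale=0.1, size=(n_units, n_units))
W = (W + W.T) / 2
np.fill_diagonal(W, 0.0)
b = np.zeros(n_units)  # one bias per unit

def energy(state):
    """E(s) = -1/2 * s^T W s - b^T s for a binary state vector s."""
    return -0.5 * state @ W @ state - b @ state

def gibbs_step(state):
    """Resample each unit in turn from p(s_i = 1 | all other units)."""
    state = state.copy()
    for i in range(n_units):
        activation = W[i] @ state + b[i]
        p_on = 1.0 / (1.0 + np.exp(-activation))
        state[i] = float(rng.random() < p_on)  # stochastic, not deterministic
    return state

state = rng.integers(0, 2, size=n_units).astype(float)
for _ in range(100):
    state = gibbs_step(state)
# Only the visible part would be observed in practice.
print("visible:", state[:n_visible], "hidden:", state[n_visible:])
```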

Restricted Boltzmann Machine

Like a Boltzmann machine, an RBM consists of visible and hidden nodes linked by connections, but the two machines differ in which nodes may be connected. In other respects, both machines are the same. The main properties of an RBM are listed below:

- The RBM model is considered a part of the energy-based model framework.

- It is a generative, probabilistic model trained with an unsupervised algorithm.

- Training adjusts the model's parameters to maximize the log-likelihood of the training data under the joint distribution that the RBM defines over its units.

- An RBM has only two layers, the input (visible) layer and the hidden layer, and the connections between them are undirected.

- Every visible node is connected to every hidden node. Because an RBM has exactly two layers, an input layer (always visible) and a hidden layer, with connections running only between them, its structure is a symmetrical bipartite graph.

- There are no connections between visible nodes in the same layer, and the hidden nodes are not interconnected within their layer either. Connections exist only between visible and hidden nodes.

- The original Boltzmann machine was a fully connected network of nodes. The RBM restricts connections within a layer, which is where it gets its name.
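The restriction in the list above shows up directly in the RBM energy function, $E(v, h) = -b^{T} v - a^{T} h - v^{T} W h$, where $a$ is the hidden bias and $b$ is the visible bias. The sketch below is a minimal illustration with made-up numbers; `rbm_energy` is a hypothetical helper name.

```python
import numpy as np

def rbm_energy(v, h, W, visible_bias, hidden_bias):
    """E(v, h) = -visible_bias.v - hidden_bias.h - v^T W h.

    W has shape (n_visible, n_hidden): it stores only visible-hidden links,
    so no term couples two visible units or two hidden units.
    """
    return -visible_bias @ v - hidden_bias @ h - v @ W @ h

W = np.array([[0.5, -1.0],
              [2.0,  0.0],
              [0.0,  1.0]])  # 3 visible units x 2 hidden units
visible_bias = np.array([0.1, 0.0, -0.2])
hidden_bias = np.array([0.0, 0.3])

v = np.array([1.0, 0.0, 1.0])
h = np.array([1.0, 1.0])
energy_value = rbm_energy(v, h, W, visible_bias, hidden_bias)
print(energy_value)  # approximately -0.7
```

Compared with a fully connected Boltzmann machine, the weight matrix here is only n_visible × n_hidden, because the restricted structure removes all visible-visible and hidden-hidden terms.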

Because their connections are undirected and they have no output layer to compare against a target, RBMs are not trained with backpropagation. Instead, their weights are usually adjusted with contrastive divergence, which approximates the gradient of the log-likelihood with a short sampling chain. The weights are first initialized at random, and the visible nodes are combined with these weights to compute the hidden nodes. Then, using the same weights, the hidden nodes are used to reconstruct the visible nodes. This process is repeated many times, and each time the weights are updated to reduce the difference between the statistics of the data and those of the reconstruction. The reconstruction generally differs from the original input.
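The procedure described above is usually implemented as contrastive divergence with a single sampling step (CD-1). The following is a minimal NumPy sketch, assuming binary units, full-batch updates, and a toy dataset of two repeated patterns; `cd1_update`, the learning rate, and the iteration count are illustrative assumptions rather than a reference implementation.

```python
import numpy as np

rng = np.random.default_rng(42)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cd1_update(v0, W, visible_bias, hidden_bias, learning_rate=0.1):
    """One CD-1 step on a batch of binary rows v0; updates arrays in place."""
    # Positive phase: hidden probabilities and sampled states given the data.
    h0_prob = sigmoid(v0 @ W + hidden_bias)
    h0 = (rng.random(h0_prob.shape) < h0_prob).astype(float)
    # Negative phase: reconstruct the visible units with the same weights,
    # then recompute the hidden probabilities from the reconstruction.
    v1_prob = sigmoid(h0 @ W.T + visible_bias)
    h1_prob = sigmoid(v1_prob @ W + hidden_bias)
    # Approximate log-likelihood gradient: data statistics minus
    # reconstruction statistics, averaged over the batch.
    n = v0.shape[0]
    W += learning_rate * (v0.T @ h0_prob - v1_prob.T @ h1_prob) / n
    visible_bias += learning_rate * (v0 - v1_prob).mean(axis=0)
    hidden_bias += learning_rate * (h0_prob - h1_prob).mean(axis=0)
    return np.mean((v0 - v1_prob) ** 2)  # reconstruction error (monitoring only)

# Toy data: two binary patterns, each repeated 50 times (illustrative only).
data = np.array([[1, 1, 0, 0, 0, 0],
                 [0, 0, 0, 0, 1, 1]] * 50, dtype=float)
n_visible, n_hidden = data.shape[1], 2
W = rng.normal(scale=0.01, size=(n_visible, n_hidden))  # random initial weights
visible_bias = np.zeros(n_visible)
hidden_bias = np.zeros(n_hidden)

errors = [cd1_update(data, W, visible_bias, hidden_bias) for _ in range(500)]
print(errors[0], errors[-1])  # the error should drop as training proceeds
```

Using hidden probabilities instead of sampled states in the update statistics is a common variance-reducing choice; the reconstruction error is only a rough progress signal, not the quantity contrastive divergence actually optimizes.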

How Does the Restricted Boltzmann Machine Operate?

In an RBM, no two units that belong to the same group (visible or hidden) are connected, so the network forms a symmetric bipartite graph. Several RBMs can be stacked to build a Deep Belief Network, which can then be fine-tuned with optimization techniques such as gradient descent and backpropagation. RBMs are now used infrequently: in the deep learning community they are increasingly being replaced by Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

Because an RBM is a stochastic network, each activated neuron behaves randomly: its state is sampled from a probability rather than computed deterministically. An RBM also has two sets of bias units, a hidden bias and a visible bias. This is where RBMs and autoencoders diverge. The hidden bias helps the RBM produce the activations in the forward pass, and the visible bias helps it reconstruct the input in the backward pass. The reconstructed input is generally not identical to the original input, because the visible units are not interconnected and cannot share information with one another.
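The stochastic behavior described above means a unit's state is drawn from its activation probability. A minimal sketch, assuming binary units and example probabilities chosen for illustration (`sample_binary` is a hypothetical helper):

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_binary(probabilities):
    """Turn activation probabilities into stochastic 0/1 unit states."""
    return (rng.random(probabilities.shape) < probabilities).astype(int)

p_hidden = np.array([0.9, 0.5, 0.1])  # example hidden-unit probabilities
samples = np.array([sample_binary(p_hidden) for _ in range(10_000)])
print(samples[:3])           # individual samples differ from one another
print(samples.mean(axis=0))  # close to [0.9, 0.5, 0.1] on average
```

Individual samples vary from draw to draw, but their average approaches the underlying probabilities.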

The above graphic depicts the first stage of training an RBM with several inputs. The inputs are multiplied by the weights, and the bias is added. A sigmoid activation function is then applied to the result, and its output determines whether or not the hidden state is activated. The weight matrix has one row per input (visible) node and one column per hidden node.

$$k^{(1)} = S\left(v^{(0)T} w + a\right)$$

where $k^{(1)}$ and $v^{(0)}$ are the vectors (column matrices) for the hidden and visible layers, the superscript denotes the iteration ($v^{(0)}$ is the original input), $w$ is the weight matrix, $a$ is the hidden-layer bias vector, and $S$ is the sigmoid function.

To compute the first hidden node, the input vector is multiplied by the first column of the weight matrix, and the matching bias term is added before the result is sent to that node. (Remember that we are not working with one-dimensional values here; we are using vectors and matrices.)
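The forward pass $k^{(1)} = S(v^{(0)T} w + a)$ and the column-by-column view of a single hidden node can be checked in NumPy. This sketch assumes 4 visible and 3 hidden units with small random weights; the sizes and values are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(1)
n_visible, n_hidden = 4, 3
w = rng.normal(scale=0.1, size=(n_visible, n_hidden))  # rows: visible, columns: hidden
a = np.zeros(n_hidden)                                  # hidden bias vector

v0 = np.array([1.0, 0.0, 1.0, 1.0])  # one input (visible) vector
k1 = sigmoid(v0 @ w + a)             # k^(1) = S(v^(0)T w + a)

# The first hidden node uses only the first column of w plus its own bias.
first_node = sigmoid(v0 @ w[:, 0] + a[0])
print(k1.shape, np.isclose(k1[0], first_node))
```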

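The above image shows the reverse (reconstruction) phase. It is identical to the first pass but runs in the opposite direction: the hidden activations are multiplied by the transposed weight matrix, and the visible bias is added:

$$v^{(1)} = S\left(k^{(1)T} w^{T} + b\right)$$

where $b$ is the visible-layer bias vector.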
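The backward (reconstruction) pass can be sketched the same way. This sketch assumes the common formulation in which the hidden activations are multiplied by the transposed weight matrix and a separate visible bias `b` is added, in line with the earlier description of the visible bias helping the backward pass; sizes and values are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(1)
n_visible, n_hidden = 4, 3
w = rng.normal(scale=0.1, size=(n_visible, n_hidden))
a = np.zeros(n_hidden)   # hidden bias (forward pass)
b = np.zeros(n_visible)  # visible bias (backward pass)

v0 = np.array([1.0, 0.0, 1.0, 1.0])
k1 = sigmoid(v0 @ w + a)    # forward pass
v1 = sigmoid(k1 @ w.T + b)  # backward pass: same weights, transposed
print(v1.shape)             # (4,)
print(v0 - v1)              # the reconstruction generally differs from the input
```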

Restricted Boltzmann Machine Applications

- Recognizing handwriting or other arbitrary patterns is a challenge in pattern recognition tasks, so RBMs are used to extract features for them.

- RBMs are frequently used in collaborative filtering, where they predict which items to recommend to a user so that the user enjoys a particular application or platform, for example recommending books or movies.

- In radar systems, RBMs are used to recognize intra-pulse patterns in signals with heavy noise and a very low signal-to-noise ratio (SNR).

- RBMs were used in the early days of deep learning for a wide range of applications, including dimensionality reduction, recommender systems, and topic modeling. In recent years, however, Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) have nearly replaced RBMs in many machine learning applications.

Practical: Restricted Boltzmann Machine Using Python

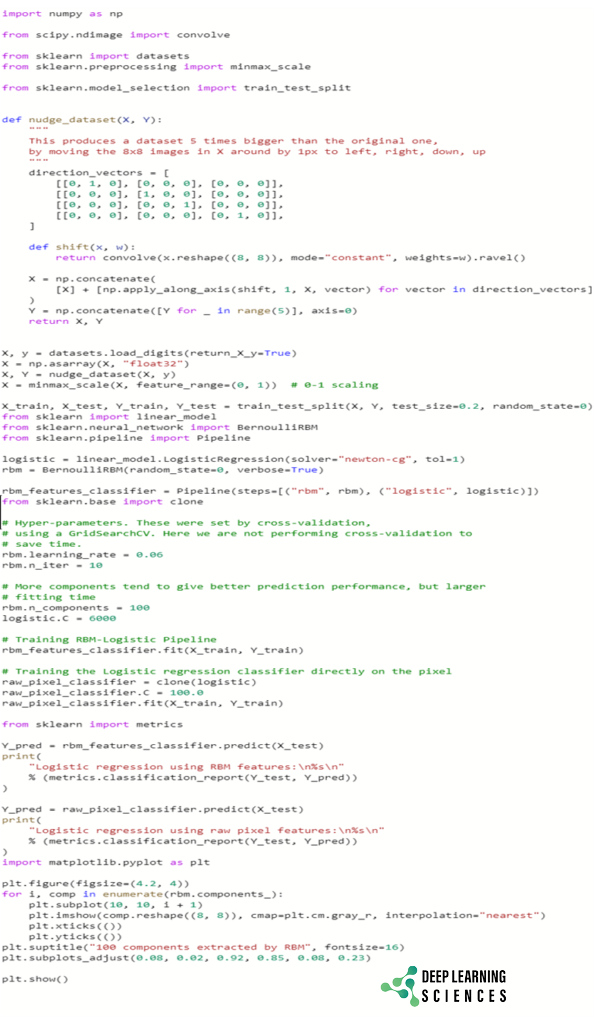

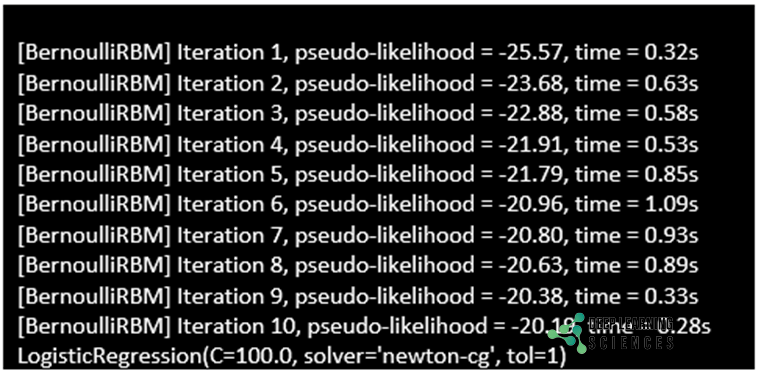

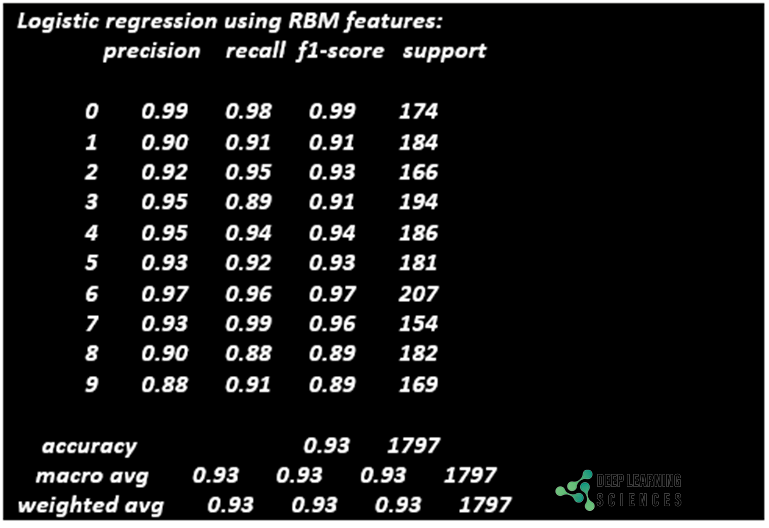

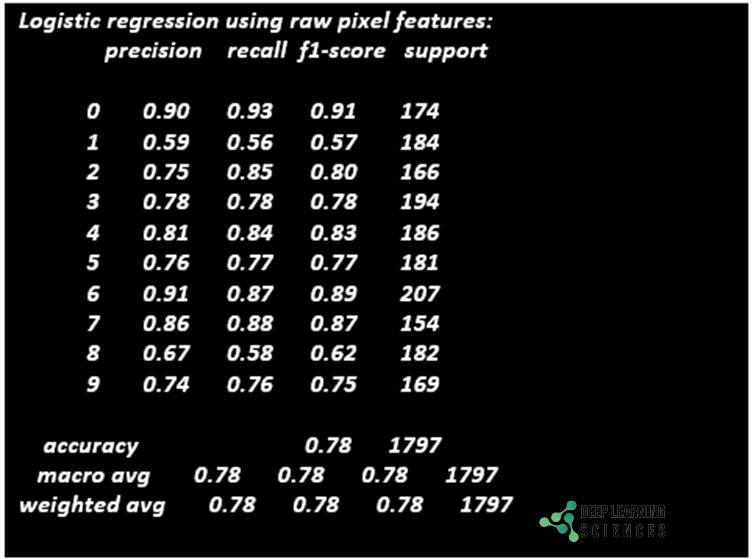

The code in this practical uses scikit-learn's BernoulliRBM for digit recognition.
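For readers who want the digit-recognition idea as text, here is a minimal sketch using scikit-learn's `BernoulliRBM` as a feature extractor in front of a logistic regression classifier. It is not necessarily the code shown in the images; the pipeline layout, train/test split, and hyperparameters are assumptions chosen for illustration.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import BernoulliRBM
from sklearn.pipeline import Pipeline

# 8x8 digit images, scaled to [0, 1] as BernoulliRBM expects.
X, y = load_digits(return_X_y=True)
X = X / 16.0

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0)

# The RBM learns features without labels; logistic regression classifies them.
model = Pipeline(steps=[
    ("rbm", BernoulliRBM(n_components=100, learning_rate=0.06,
                         n_iter=20, random_state=0)),
    ("logistic", LogisticRegression(max_iter=1000)),
])
model.fit(X_train, y_train)
print("Test accuracy:", model.score(X_test, y_test))
```

Only the logistic regression step actually uses `y_train`; `BernoulliRBM.fit` ignores the labels, so the RBM acts purely as an unsupervised feature extractor in this pipeline.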

FAQs

Where can restricted Boltzmann machines be used?

RBMs are used for feature extraction and dimensionality reduction, and they have been applied to image compression, classification, regression, collaborative filtering, and many other machine learning and deep learning tasks.

What are the names of the restricted Boltzmann machine’s two layers?

The two layers that make up a restricted Boltzmann machine are the visible (input) layer and the hidden (output) layer.