Decision Tree Interview Questions with Answers: Decision trees are a popular and widely used algorithm in the field of machine learning. They are simple to understand, interpret and visualize, making them an attractive solution for many real-world problems. The ability to handle both categorical and numerical data makes Decision Trees a versatile tool for classification and regression problems. In this blog post, we will cover some of the common questions that may arise during a technical interview focused on Decision Trees.

Overview of Decision Trees in Machine Learning: Decision Trees are used in many fields, such as finance, medicine, and marketing. Their main appeal is interpretability: every prediction can be traced back to an explicit sequence of feature tests, which is valuable when a decision has to be explained or audited.

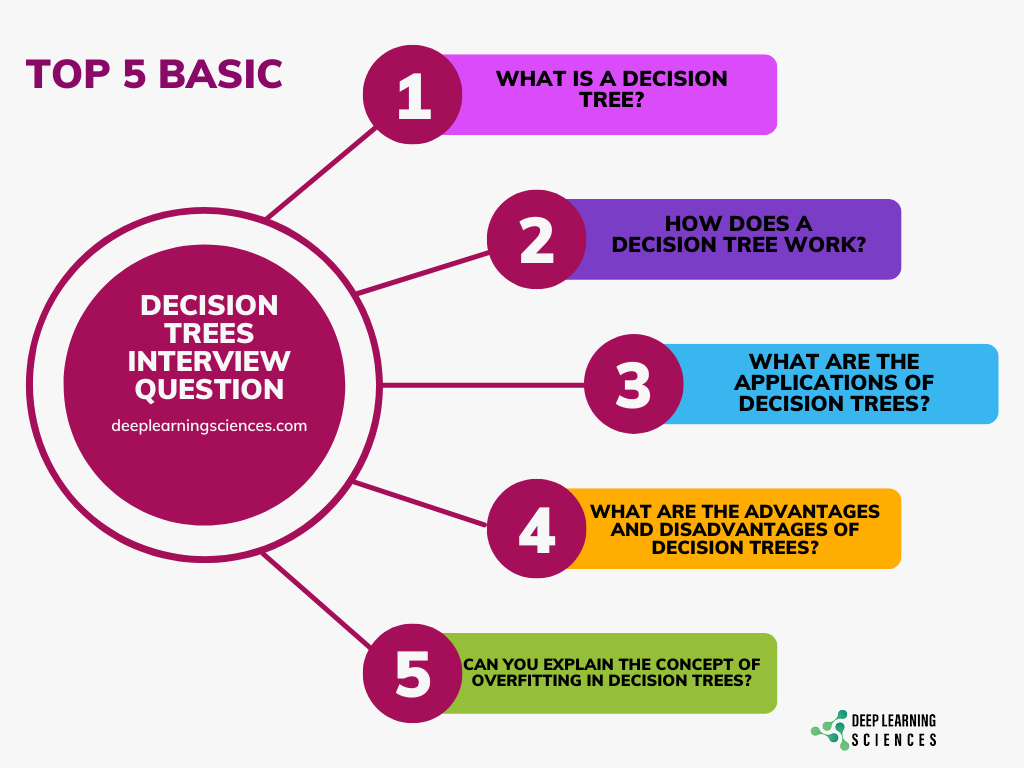

Basic Decision Tree Interview Questions

Basic interview questions assess the candidate’s understanding of the fundamental concepts of Decision Trees: the definition, the working principle, split criteria, and key ideas such as Overfitting and Pruning. They show whether the candidate has a solid foundation in the subject and can explain these concepts simply and concisely.

Question: Can you explain the concept of Decision Trees?

Answer: A Decision Tree is a supervised machine learning algorithm used for both classification and regression problems. The main idea is to build a tree-like model in which each internal node tests a feature, each branch corresponds to an outcome of that test, and each leaf holds a prediction. The model is built by recursively splitting the training data into subsets based on feature values. A leaf predicts the most common class among its training samples (classification) or the mean of their target values (regression). Decision Trees are simple to understand, interpret, and visualize, which makes them a popular choice for many data science tasks.

Question: How does a Decision Tree make predictions?

Answer: A Decision Tree makes a prediction by starting at the root node and following a series of decisions down the tree until it reaches a leaf. At each internal node, the model tests one feature of the sample (for example, whether a value is below a threshold) and follows the matching branch. The leaf that the sample ends up in supplies the prediction: the most common class among the training samples that reached that leaf for classification, or their mean target value for regression. Each new data point is routed through the tree independently in this way.
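The root-to-leaf walk can be sketched in a few lines of Python. This is a minimal illustration, not a library API: the tree below is hand-built, and the feature names (`income`, `credit_score`), thresholds, and leaf labels are hypothetical values chosen only for the example.

```python
# A hypothetical hand-built tree. Internal nodes test one feature against a
# threshold; leaves store the prediction. All names and values are made up.
tree = {
    "feature": "income", "threshold": 50000,
    "left": {"leaf": "reject"},                # income <= 50000
    "right": {
        "feature": "credit_score", "threshold": 650,
        "left": {"leaf": "review"},            # credit_score <= 650
        "right": {"leaf": "approve"},          # credit_score > 650
    },
}

def predict(node, sample):
    """Walk from the root to a leaf, following one branch per feature test."""
    while "leaf" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["leaf"]

print(predict(tree, {"income": 80000, "credit_score": 700}))
```

A trained tree from a real library stores the same information (feature, threshold, children, leaf value) in its own internal format.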

Question: What is the difference between Gini and Information Gain in Decision Trees?

Answer: Gini impurity and Information Gain are two criteria used to choose the best split at each node of a Classification Tree. Gini impurity is the probability of misclassifying a randomly chosen sample from the node if it were labeled at random according to the node’s class distribution; the best split is the one that yields the lowest weighted impurity in the child nodes. Information Gain is the reduction in entropy that results from splitting the data on a feature; the best split is the one with the largest reduction. Both are classification criteria. Regression Trees typically use a different measure, such as the reduction in variance (mean squared error). In practice, Gini and entropy usually select similar splits, and Gini is slightly cheaper to compute because it avoids the logarithm.
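As a rough sketch of how the two criteria are computed, the functions below implement Gini impurity, entropy, and Information Gain for lists of class labels. The function names and the toy labels are illustrative assumptions, not part of any particular library.

```python
from collections import Counter
from math import log2

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def entropy(labels):
    """Shannon entropy of the class distribution, in bits."""
    n = len(labels)
    return -sum((count / n) * log2(count / n) for count in Counter(labels).values())

def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted

# Toy split: a balanced parent node divided into two mostly pure children.
parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"]
right = ["yes"] + ["no"] * 4

print(gini(parent), gini(left))
print(information_gain(parent, [left, right]))
```

A tree-building algorithm would evaluate one of these scores for every candidate split and keep the best one.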

Question: Can you explain the concept of Overfitting in Decision Trees?

Answer: Overfitting is a common problem in machine learning that occurs when a model is too complex and performs well on the training data but poorly on new data. In the context of Decision Trees, Overfitting can occur when the tree becomes too deep and complex, leading to a model that fits the training data too closely. This results in a model that generalizes poorly to new data, leading to a high rate of error. To mitigate the problem of Overfitting, techniques such as pruning and ensemble methods can be used.

Question: What is Pruning in Decision Trees?

Answer: Pruning is a technique used to reduce the size of a Decision Tree and prevent Overfitting. The idea is to remove branches that add little or no predictive accuracy on data the tree was not trained on. This results in a simpler and more interpretable tree that is less likely to overfit the training data. Pruning can be applied while the tree is grown (pre-pruning, for example by limiting the depth or the minimum number of samples per leaf) or after it is fully grown (post-pruning). Post-pruning techniques include reduced error pruning, cost complexity pruning, and minimum description length pruning.
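For cost complexity pruning, the trade-off between fit and size is usually written as a penalized error. The notation below is the common textbook form and is an assumption of this sketch: R(T) is the training error of tree T, |T̃| is its number of leaves, and α ≥ 0 is the complexity parameter.

```latex
R_\alpha(T) = R(T) + \alpha \, |\tilde{T}|
```

For a given α, the pruned tree is the subtree that minimizes this quantity. With α = 0 the full tree is kept, and larger values of α favor smaller trees; α is typically chosen by cross-validation.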

Advanced Interview Questions

Advanced interview questions are designed to assess the candidate’s in-depth knowledge and experience with Decision Trees. These questions will cover advanced topics such as ensemble methods, boosting, and random forests. Advanced interview questions will test the candidate’s ability to apply their knowledge to real-world problems and make informed decisions. These questions are designed to challenge the candidate and help assess their ability to think critically and solve complex problems.

Question: Can you explain the concept of Ensemble methods in Decision Trees?

Answer: Ensemble methods are machine learning techniques that combine multiple models to form a stronger model. In the context of Decision Trees, this means combining the predictions of many trees, by voting for classification or by averaging for regression. A single deep tree tends to overfit, and combining trees counteracts this in two ways. Bagging-style methods, including Random Forest, train trees independently and average them, which mainly reduces variance and makes predictions more stable. Boosting trains trees sequentially so that each one corrects the errors of the previous ones, which mainly reduces bias. Examples of ensemble methods in Decision Trees include Bagging, Random Forest, and Boosting.

Question: How does Boosting work in Decision Trees?

Answer: Boosting is an ensemble method that combines multiple weak models, typically shallow trees, to form a strong model. Trees are added one at a time, and each new tree focuses on the errors made by the trees before it: AdaBoost increases the weight of misclassified samples, while gradient boosting fits each new tree to the residual errors of the current ensemble. The final prediction is a weighted sum of all the trees. Boosting is an effective way to improve the accuracy of Decision Tree models, but it can overfit if too many trees are added or the learning rate is too high.
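The sequential idea can be illustrated with a small gradient-boosting-style sketch for regression, in which each new depth-1 tree (a stump) is fitted to the residuals left by the previous ones. This is a simplified illustration under stated assumptions: a single numeric feature with at least two distinct values, squared error loss, and made-up data. The names `fit_stump` and `boost` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that minimizes squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean

def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    stumps = []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]  # errors made so far
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Made-up data with three rough plateaus.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]

baseline = lambda x: sum(ys) / len(ys)
model = boost(xs, ys)
print(mse(baseline, xs, ys), mse(model, xs, ys))
```

The learning rate shrinks each tree’s contribution; smaller values usually need more rounds but tend to generalize better.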

Question: What is Random Forest and how does it work?

Answer: Random Forest is an ensemble method that combines many Decision Trees to make predictions. Its goal is to reduce the variance of individual trees by averaging trees that are as uncorrelated as possible. It introduces randomness in two places. First, each tree is trained on a bootstrap sample of the training data, drawn at random with replacement. Second, at each split only a random subset of the features is considered as candidates. The final prediction is the majority vote of the trees for classification or their average for regression, which reduces the risk of overfitting compared with a single deep tree.
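The sketch below illustrates the two sources of randomness with a deliberately tiny forest of depth-1 trees (stumps). It is an illustration under assumptions, not a full Random Forest: because a stump has only one split, sampling features once per tree is the same as sampling them at each split. The helper names and the toy data are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, features):
    """Pick the (feature, threshold) pair with the fewest training errors."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            if not left or not right:
                continue
            l_lab, r_lab = majority(left), majority(right)
            errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # no valid split: fall back to a constant prediction
        label = majority(labels)
        return lambda row: label
    _, f, t, l_lab, r_lab = best
    return lambda row: l_lab if row[f] <= t else r_lab

def fit_forest(rows, labels, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]           # bootstrap sample
        feats = rng.sample(range(n_features), max_features)  # random feature subset
        trees.append(fit_stump([rows[i] for i in idx],
                               [labels[i] for i in idx], feats))
    # Aggregate by majority vote across all trees.
    return lambda row: majority([tree(row) for tree in trees])

# Made-up data: two numeric features, two well-separated classes.
rows = [(1, 10), (2, 12), (3, 11), (8, 30), (9, 28), (10, 31)]
labels = ["a", "a", "a", "b", "b", "b"]

forest = fit_forest(rows, labels)
print(forest((0, 9)), forest((11, 32)))
```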

Question: How does Bagging differ from Boosting in Decision Trees?

Answer: Bagging and Boosting are two popular ensemble methods for Decision Trees. Bagging, or Bootstrap Aggregating, trains multiple trees independently, each on a bootstrap sample of the data (drawn at random with replacement), and combines them by voting or averaging. Its main effect is to reduce the variance of the individual trees, and because the trees are independent they can be trained in parallel. Boosting, on the other hand, builds trees sequentially, with each new tree focusing on the errors made by the previous trees. Its main effect is to reduce bias, which often yields higher accuracy but makes the model more sensitive to noisy data and to the number of trees.

Question: Can you explain the difference between a Regression Tree and a Classification Tree?

Answer: Regression Trees and Classification Trees are two types of Decision Trees that are used for different types of problems. Regression Trees are used when the goal is to predict a continuous value, and Classification Trees are used when the goal is to predict a category. The two differ in how splits are chosen and in what a leaf returns. A Classification Tree chooses splits with an impurity measure such as Gini or entropy, and each leaf predicts the most common class among its training samples. A Regression Tree chooses splits that reduce the variance (mean squared error) of the target, and each leaf predicts the mean of the target values of its training samples.
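The difference at the leaf level is small enough to show directly. In this minimal sketch, the function names and sample values are illustrative assumptions: a classification leaf returns the most common class of the training targets that reached it, and a regression leaf returns their mean.

```python
from collections import Counter
from statistics import mean

def classification_leaf(targets):
    """Predict the most common class among the samples in the leaf."""
    return Counter(targets).most_common(1)[0][0]

def regression_leaf(targets):
    """Predict the mean target value of the samples in the leaf."""
    return mean(targets)

print(classification_leaf(["spam", "ham", "spam"]))
print(regression_leaf([200.0, 220.0, 240.0]))
```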

Question: How do you evaluate the performance of a Decision Tree model?

Answer: The right metrics depend on the type of tree. For Classification Trees, common metrics include accuracy, precision, recall, and F1 score, all of which can be derived from the confusion matrix. The ROC curve and AUC assess how well the model separates the classes across different decision thresholds. For Regression Trees, common metrics include mean squared error, mean absolute error, and R-squared. In all cases, the model should be evaluated on a held-out test set or with cross-validation, because performance on the training data overstates how well a tree will do on new data. To improve the performance of Decision Tree models, techniques such as pruning and ensemble methods can be used.
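As a small worked example, the classification metrics above can be computed from the four confusion matrix counts. The function name `binary_metrics` and the labels below are made up for illustration; in practice the predictions would come from a tree evaluated on a held-out test set.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 from confusion matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical test-set labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]

metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Here there are 3 true positives, 1 false positive, 1 false negative, and 3 true negatives, so all four metrics come out to 0.75.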

Real-world Case Study Questions

In this section, we’ll look at some questions related to real-world applications of Decision Trees. These questions test your understanding of how Decision Trees are used in practical scenarios and your ability to apply the concepts to solve problems. They are typically asked during advanced-level interviews and are a good opportunity to demonstrate hands-on experience.

Question: Can you give an example of how Decision Trees can be used in the banking sector?

Answer: Decision Trees can be used in the banking sector for a variety of tasks, such as credit risk assessment, loan approval, and customer segmentation. For example, a bank might use a Decision Tree to predict the likelihood of a customer defaulting on a loan. The model would take into account factors such as income, employment history, credit score, and loan amount, and use this information to make a prediction. The bank could use the Decision Tree to automate the loan approval process and make more informed decisions about which customers are eligible for loans.

Question: Can you explain how Decision Trees can be used for medical diagnosis?

Answer: Decision Trees can be used for medical diagnosis by building a model that predicts the likelihood of a patient having a particular condition based on symptoms, test results, and other factors. The root node tests the single most informative feature, such as the result of a key lab test, and each subsequent node tests another feature on the patients who followed that branch. A new patient is routed down the tree until a leaf is reached, and the leaf gives the predicted diagnosis. Because the path from root to leaf reads like a sequence of clinical rules, clinicians can review and verify the reasoning. The prediction can also support treatment recommendations based on the diagnosis.

Question: How can Decision Trees be used for customer segmentation?

Answer: Decision Trees can be used for customer segmentation by grouping customers according to their characteristics and behaviors. Because a Decision Tree is a supervised model, it needs a target variable, such as whether a customer responded to a campaign or how much they spent. The model would take into account factors such as demographics and purchasing history, and split the data into subsets that differ as much as possible with respect to that target. Each leaf then represents a customer segment defined by a readable set of rules. These segments can be used to make personalized marketing recommendations for each group.

Question: Can you give an example of how Decision Trees can be used in the stock market?

Answer: Decision Trees can be used in the stock market to predict stock prices and make investment decisions. The model would take into account factors such as historical stock prices, economic indicators, and news events. The model would then split the data into subsets based on the values of the features, and make predictions about the future price of the stock. The model could be used by investors to make informed decisions about when to buy and sell stocks.

Question: How can Decision Trees be used for fraud detection?

Answer: Decision Trees can be used for fraud detection by building a model that predicts the likelihood of a transaction being fraudulent based on a set of features. The model would take into account factors such as the location of the transaction, the amount of the transaction, and the spending behavior of the customer. The model would then split the data into subsets based on the values of the features, and make predictions about the likelihood of fraud. The model could be used to automate the fraud detection process and identify fraudulent transactions more quickly and accurately.

Conclusion

In conclusion, Decision Trees are a popular machine learning technique that can be used for a variety of applications, including credit risk assessment, medical diagnosis, customer segmentation, stock market prediction, and fraud detection. The questions covered in this blog post, from basic to advanced and real-world case study questions, are meant to provide a comprehensive overview of the knowledge and skills required to work with Decision Trees. Whether you are preparing for an interview or simply looking to deepen your understanding of this technique, these questions and answers can serve as a useful resource. It’s important to note that these questions are not exhaustive, but they will give you a good start and a strong foundation in Decision Tree knowledge. With practice, you can become confident in your ability to solve real-world problems using this powerful machine learning tool.

FAQs

What are the benefits of using Decision Trees in machine learning?

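The benefits of using Decision Trees in machine learning include their ability to handle both categorical and numerical data, their ability to provide a clear and interpretable model, and the fact that they need little data preparation such as feature scaling. Some implementations can also handle missing values directly, although this depends on the algorithm and library used.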

What are some challenges associated with Decision Trees?

Some challenges associated with Decision Trees include overfitting, the loss of interpretability when trees are allowed to grow deep, and instability: small changes in the training data can produce a very different tree.

What is the difference between a Decision Tree and Random Forest?

A Decision Tree is a single tree model, while a Random Forest is an ensemble of multiple Decision Trees. The main difference between the two is that a Random Forest generates multiple trees and combines their predictions to make a final prediction, while a Decision Tree generates a single tree and makes a prediction based on that tree.